Talk:Mod:zazu

JC: General comments: Good write up with clear and concise explanations of your results. You demonstrate a good understanding of the material, but show your working for calculations.

This computational experiment investigates the structural and dynamic properties of a simple liquid through the use of molecular dynamics (MD) simulations. MD simulations enable us to calculate how a particular set of atoms move over time. Using statistical physics, the positions, velocities and forces of the atoms can be used to determine quantities such as temperature and pressure. Further investigations are made into the heat capacities, radial distribution functions and diffusion coefficients for systems in the solid, liquid and vapour phases.

Theory

The Classical Particle Approximation

In order to be able to simulate a real system of atoms, some approximations have to be made; for anything more complicated than a hydrogen atom, the Schrödinger equation describing its behaviour is impossible to solve exactly without such approximations.

Assuming that atoms behave as classical particles provides a very good approximation of a system. For a system consisting of atoms, each one will interact with the others, thus feeling a force. According to Newton's second law, this force results in an acceleration of the atom, as outlined in the following equation:

Where:

= the force acting on atom i = the mass of atom i = the acceleration of atom i (the rate of change of its velocity) = the velocity of atom i = the position of atom i

From this second order differential equation, the atomic positions and velocities can be determined at any desired time, so long as it is known how the force behaves as a function of time. A system consisting of atoms has of these equations (one for each atom). Attempting to solve all of these equations by hand would be extremely impractical, thus computer simulations are employed.

JC: These N equations are coupled since the force on atom i depends on the positions of all other atoms, so the equations cannot be solved analytically.

Numerical Integration

In order to numerically solve these equations, the simulation needs to be broken up into a series of discrete timesteps, rather than treating the atomic positions, velocities and forces as continuous functions of time. Each timestep has a length of . The two algorithms outlined below are derived from the Taylor expansion of the position or velocity of the atoms at the timestep .

Verlet Algorithm:

denotes the position of an atom, , at time .

Velocity Verlet Algorithm:

denotes the velocity of an atom, , at time .

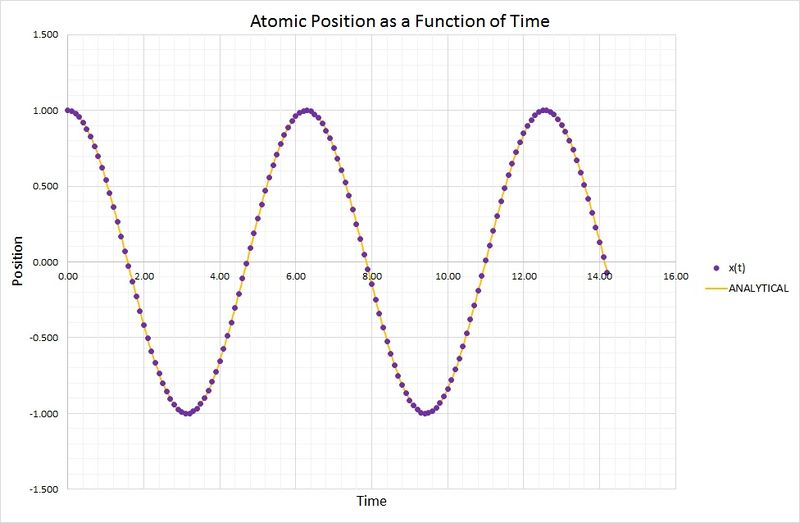

Smaller values of timestep provide numerical approximations which are closer to the real function, the classical harmonic oscillator. The following graph compares the results obtained for position as a function of time, using the velocity-Verlet algorithm and the harmonic oscillator:

As you can see, the two methods are in very close agreement. The harmonic oscillator values were calculated using the following equation:

where and .

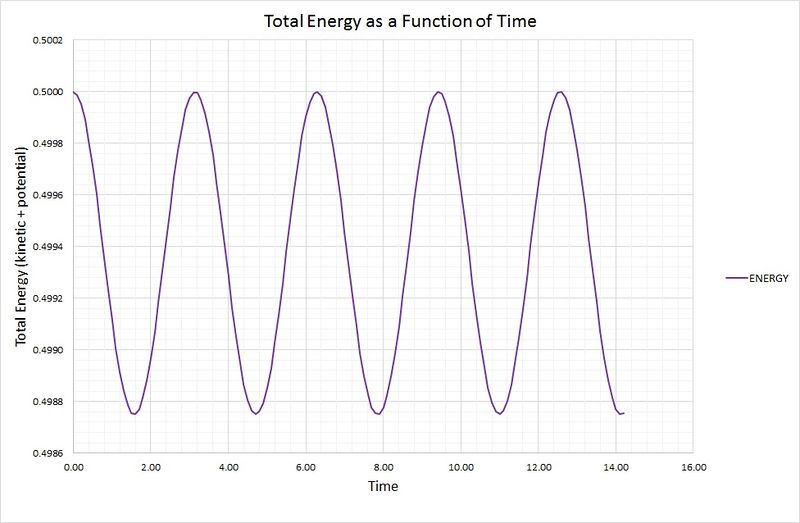

The total energy of the oscillator for the velocity-Verlet solution is equal to the sum of the kinetic and potential energies, as outlined in the following graph:

The potential energy term was found using the equation , where the force constant,, is equal to 1. The kinetic energy term was calculated using , where the mass, is equal to 1.

Below is a plot showing the difference (error) between the results from the harmonic oscillator and the velocity-Verlet algorithm:

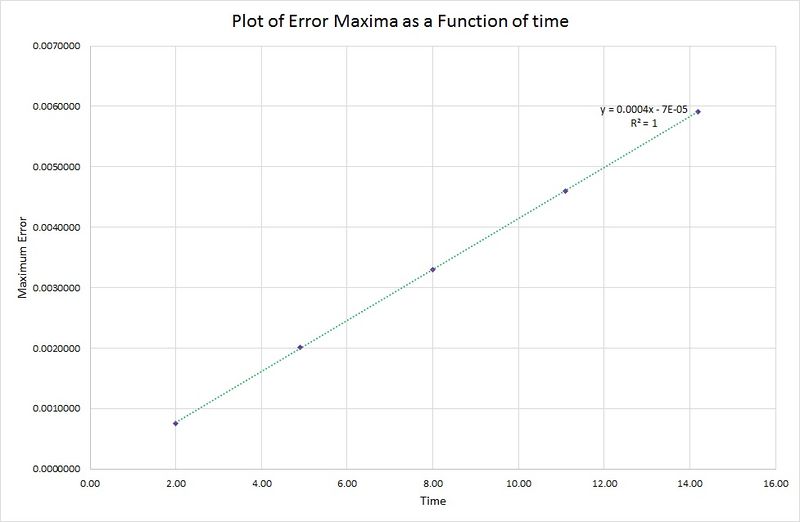

As time increases, the maximum in the error also increases. This is due to the harmonic oscillator having a slightly shorter wavelength than the one contained in the algorithm, so as each period of the wave passes, this difference increases. This relationship is highlighted in the following graph where the peak maxima of the error are plotted against time:

JC: The increase in the error over time is due to accumulation of error as the algorithm gradually departs from the analytical solution. Did you decide what timestep could be used to ensure that total energy varied by less than 1%?

There is a linear relationship between the error data for the harmonic oscillator and the algorithm, therefore the period of oscillation in the position/time graph must be constant.

Atomic Forces

The force acting on an object is governed by the potential that it experiences:

Single Lennard-Jones interaction:

The separation at which the potential energy is zero can be found by equating the single Lennard-Jones interaction to zero, and solving for . The following results:

The force at this separation is found by differentiating the L-J potential, and leads to this result:

To find the equilibrium separation, the derivative of the potential energy is set to zero and solved for :

The well depth is equal to the potential energy at the equilibrium separation and thus can be found by substituting the value for into the L-J potential equation and rearranging:

Evaluation of the following integrals when leads to:

JC: Answers correct, but show your working.

Periodic Boundary Conditions

Taking the concentration of water under standard conditions to be , the number of water molecules in 1 ml of water under standard conditions is therefore approximately . The volume of 10000 water molecules under standard conditions is approximately . As you can see, this is a remarkably small volume: too small to be measured in a laboratory environment. This illustrates in a real world application just how small a typical simulation is (where the number of particles, , is normally between and ).

JC: Again correct, but show your working.

An atom at position in a cubic simulation box, which runs from to along the vector in a single timestep, will end up at the point once the periodic boundary conditions have been applied.

Periodic boundary conditions result in a simulation of an infinite lattice, which when calculating the potential would include an infinite number of pair interactions. This is both impractical for the simulations and unnecessary because after a certain interatomic distance, the integral of the Lennard-Jones potential becomes negligible, thus a cutoff value is applied. The three example integrals given above show the insignificance of the energy contribution when integrating from the cutoff value to infinity.

Reduced Units

By convention, reduced units are used when working with Lennard-Jones interactions; all quantities are divided by scaling factors. This converts values into convenient forms from what would otherwise be rather lengthy (e.g. distances are divided by , generating a value that's typically around instead of ).

These reduced units are denoted by an asterisk, and undergo the following conversions:

Distance: Energy: Temperature:

For Argon, the Lennard-Jones parameters are and .

The LJ cutoff is , which in real units is .

The well depth is .

The reduced temperature . In real units, .

JC: Correct.

Equilibration

The Simulation Box

Before an MD simulation can take place, the initial states of all the atoms in that system must be known. These defined states can vary depending on the method of numerical integration required, but as a general rule, at least the starting position of each atom needs to be specified. LAMMPS is able to generate crystal lattice structures according to the associated input command; for a solid crystal this is reasonably simple, whereas it becomes more complicated to generate coordinates for atoms contained in a liquid. The Brownian motion of a liquid results in an absence of long-range order, meaning that there is no single point of reference from which to work out the positions of all other atoms. Giving atoms random starting coordinates would cause problems in simulations because there is a possibility of several atoms being assigned coordinates in such close proximity that they overlap. Atomic overlap of this nature would generate huge repulsion potentials and raise the energy of the system by an amount unrepresentative of a real liquid system.

JC: A simulation starting from a configuration with high repulsion is likely to be unstable and to crash unless a much smaller timestep is used.

Formation of a simple cubic lattice, although an unlikely situation for the system to be found physically, provides a starting point for the simulations. The command:

lattice sc 0.8

creates a grid of points which form the simple cubic lattice, consisting of one lattice point per unit cell. The value of specifies the number density, which is the number of lattice points per unit volume. Thus:

From the output file of a simulation, the following line could be seen:

Lattice spacing in x,y,z = 1.07722 1.07722 1.07722

This corresponds to the distance (in reduced units) between points of the specified lattice type. Using a rearrangement of the above formula, it can be seen how this lattice spacing corresponds to a number density of :

If instead we consider a face-centred cubic (fcc) lattice (which has 4 lattice points) with a number density of , the side length of the cubic unit cell becomes:

An input command of:

region box block 0 10 0 10 0 10 create_box 1 box create_atoms 1 box

would generate a simulation box consisting of 1000 (10x10x10) unit cells of the lattice type being used. Considering an fcc lattice again, this would correspond to 4000 atoms being generated, as there are 4 atoms per unit cell.

JC: Correct.

Defining Atomic Properties

Along with the positions of the atoms, their physical properties are also needed for a successful simulation.

The following commands appear in the input script:

mass 1 1.0 pair_style lj/cut 3.0 pair_coeff * * 1.0 1.0

The first of these corresponds to the mass of atoms of type 1, the value of which is 1.0. The second line specifies the type of interactions between pairs of atoms; in this case the interaction type is the Lennard-Jones potential. The "cut 3.0" refers to the cutoff point of the Lennard-Jones potential; any interactions beyond are not considered. In the final line, "pair_coeff" relates to and , respectively, with " * * " meaning that the entire system of atoms are to be considered. "1.0 1.0" are the values of the specified parameters.

JC: Good explanation of the "cut" in the pair_style command.

One more property still needs to be defined: the velocity of each atom. Given that and are being specified, the velocity Verlet integration algorithm needs to be implemented.

Running the Simulation

The following lines instruct LAMMPS to input a timestep value of 0.001 whenever it encounters the text "${timestep}" on a subsequent line.

### SPECIFY TIMESTEP ###

variable timestep equal 0.001

variable n_steps equal floor(100/${timestep})

variable n_steps equal floor(100/0.001)

timestep ${timestep}

timestep 0.001

### RUN SIMULATION ###

run ${n_steps}

run 100000

Although this may seem rather a long instruction, it is considerably easier to use this variable definition of the timestep, rather than just using:

timestep 0.001 run 100000

If only these two lines of code were used, changing the timestep would require manual modification at every point it appears in the script. The variable version only requires the first input value to be changed, whilst also ensuring that the overall length of time for the simulation is kept constant, no matter what the value of the timestep is.

Checking Equilibrium

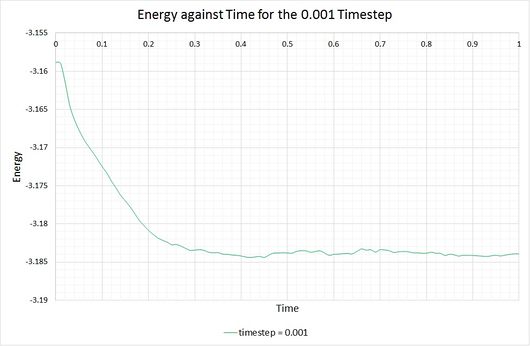

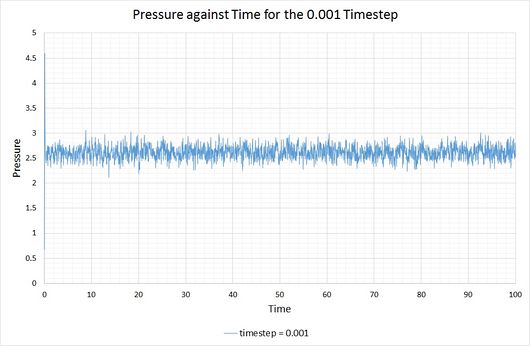

By plotting the thermodynamic data from a simulation output, it can be seen whether or not a system reaches the equilibrium state, and if so, how long it takes to get there. The following graphs show these equations of state, alongside a magnified snapshot to ease analysis:

From these graphs it is shown that the simulation does indeed reach equilibrium with a timestep of 0.001, as a constant (average) value is maintained for the energy, temperature and pressure of the system. From investigation of the magnified graphs, the time at which the equilibrium state is reached can be identified. It appears to vary slightly between the three parameters, therefore we will select the value corresponding to the latest point in time, this being for the energy plot at approximately .

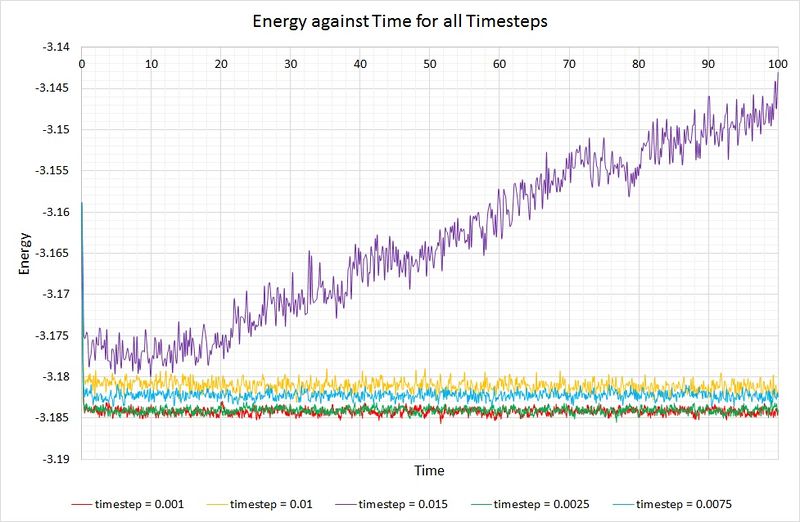

Now let's compare the energies of a system simulated with different timesteps:

JC: Good idea to see how quickly equilibrium is reached.

Shorter timesteps produce results which more accurately describe the physical reality of a simulation. However, this comes at a cost; the same number of simulation steps cover a shorter amount of real time compared to larger timesteps, limiting the length of observation. The largest timestep which generates reasonable results is 0.01, because the system has been able to reach equilibrium. In contrast, the 0.015 timestep (the largest of them all) has failed to reach the equilibrium state and is therefore a particularly bad choice of timestep for simulation. The best choice of timestep here would be 0.0025 because its energy is most comparable to that produced by 0.001 (the smallest timestep producing the most accurate results), whilst providing a longer overall simulation time.

JC: Good choice of timestep. Remember that the energy of the system shouldn't depend on the timestep, so timesteps above 0.0025 are not suitable.

Running Simulations Under Specific Conditions

Ensembles

Up until this point, the simulations have been described by a microcanonical ensemble (also know as NVE ensemble), derived from statistical mechanics. The macroscopic variables N (total number of atoms), V (volume of the system) and E (total energy) are held constant.

The following simulations have been modified to run under the conditions of an isobaric-isothermal (NpT) ensemble, leading to an equation of state for the model fluid at atmospheric pressure.

Thermostats and Barostats

According to the equipartition theorem from statistical thermodynamics, every degree of freedom in a system at equilibrium will, on average, have of energy. In a system consisting of atoms, each with 3 degrees of freedom, the following equations for kinetic energy are obtained:

From this, the instantaneous temperature, , can be determined after every timestep. will fluctuate and differ from the target temperature, , specified in the input script. Changing the temperature by multiplying by a constant factor enables the kinetic energy of the system to be controlled:

- If , then is too high and needs to be reduced

- If , then is too low and needs to be increased

In order to ensure that the temperature is correct , a solution for must be determined. This can be done by solving the following equations:

The solution:

→

→

→

Thus:

To control the pressure of the system, the pressure is calculated at each timestep and if it is too high, the size of the simulation box is increased. Similarly, the box is decreased if the pressure is too low. For this reason, the volume of the simulation box is not constant, thus the simulations are in the NpT ensemble.

JC: Good understanding.

Examining the Input Script

The following lines are taken from one of the input scripts:

fix aves all ave/time 100 1000 100000 v_dens v_temp v_press v_dens2 v_temp2 v_press2 run 100000

The fix command tells LAMMPS which simulated thermodynamic properties to compute and generates the average value for each one. The three numbers that follow (100 1000 100000) relate to the frequency of sampling (Nevery, i.e. use input values every 100 timesteps), the number of samples to incorporate in the average (Nrepeat, i.e. use input values 1000 times for calculating averages) and how frequently to calculate the averages (Nfreq, i.e. calculate averages every 100000 timesteps).

The second line (run 100000) dictates how long to run the simulation for; as the timestep has been set at 0.0025, the simulation will run for 250 units of reduced time.

Plotting the Equations of State

From rearrangement of the ideal gas law, the density can be determined:

→

As all calculations are performed using reduced units, in this instance, therefore the expression for density simplifies to:

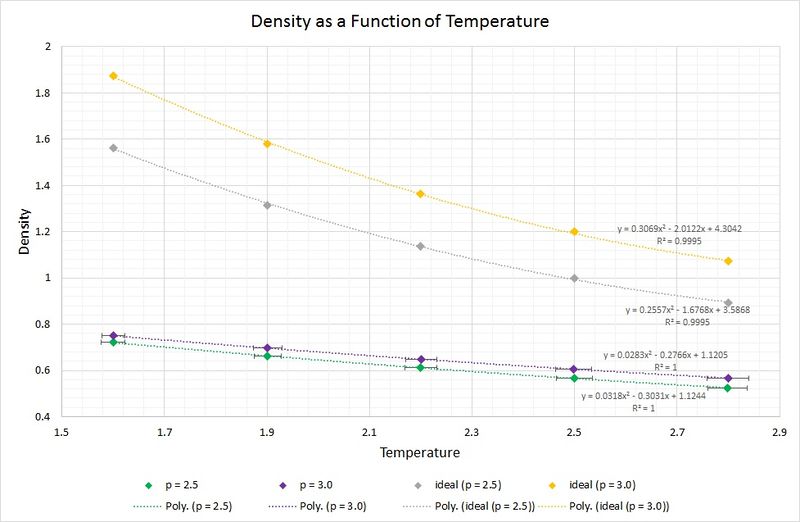

The following graph displays the densities plotted as a function of temperature for the two pressures simulated (2.5 and 3.0), as well as the densities predicted by the ideal gas law at the same pressures. Whilst the experimental data points include error bars for both parameters plotted, the ones for the densities are not visible as the standard error is so small (approx. +/-0.004 reduced density units). Looking at the temperature error bars, it can be seen that the standard error increases with increasing temperature.

JC: Why do you use a polynomical fit for the ideal gas data point? You know the equation that relates density to temperature for the ideal gas.

The data from the simulations produce densities which are considerably lower than those predicted by the ideal gas law. This can be rationalised by the fact that the ideal gas law makes a series of assumptions, including an absence of intermolecular interactions. In the simulations of the liquid these assumptions have not been made, and instead, Lennard-Jones interactions such as short-range repulsions arising from orbital overlap between atoms, are taken into account. As a result of this, the atoms are found to be at a greater separation, thus the density is lower.

As the pressure is increased from 2.5 to 3.0, the discrepancy between the ideal and simulated data increases. This can be attributed to the aforementioned short-range repulsions which are more prevalent in a higher pressure system, as the atoms are closer together on average, and thus experience a greater repulsion. Moreover, at the higher pressure of 3.0, the assumptions made by the ideal gas model result in a greater deviation from the simulated data than that found with the pressure of 2.5.

One other observation is the convergence between the simulated and ideally predicted data as the temperature increases. A rise in temperature leads to a rise in kinetic energy; begins to dominate and as it does so, the intermolecular repulsions become increasingly negligible. Consequently, the ideal gas law begins to describe the system more accurately.

Heat Capacity

Below is an example of one the input scripts used to simulate a liquid in density-temperature phase space, in order to calculate the heat capacity. The simulations were run under the NVT ensemble.

### DEFINE SIMULATION BOX GEOMETRY ###

lattice sc 0.2

region box block 0 15 0 15 0 15

create_box 1 box

create_atoms 1 box

### DEFINE PHYSICAL PROPERTIES OF ATOMS ###

mass 1 1.0

pair_style lj/cut/opt 3.0

pair_coeff 1 1 1.0 1.0

neighbor 2.0 bin

### SPECIFY THE REQUIRED THERMODYNAMIC STATE ###

variable T equal 2.0

variable timestep equal 0.0025

### ASSIGN ATOMIC VELOCITIES ###

velocity all create ${T} 12345 dist gaussian rot yes mom yes

### SPECIFY ENSEMBLE ###

timestep ${timestep}

fix nve all nve

### THERMODYNAMIC OUTPUT CONTROL ###

thermo_style custom time etotal temp press

thermo 10

### RECORD TRAJECTORY ###

dump traj all custom 1000 output-1 id x y z

### SPECIFY TIMESTEP ###

### RUN SIMULATION TO MELT CRYSTAL ###

run 10000

unfix nve

reset_timestep 0

### BRING SYSTEM TO REQUIRED STATE ###

variable tdamp equal ${timestep}*100

fix nvt all nvt temp ${T} ${T} ${tdamp}

run 10000

unfix nvt

fix nve all nve

reset_timestep 0

### MEASURE SYSTEM STATE ###

thermo_style custom step etotal temp press density

variable temp equal temp

variable etotal equal etotal

variable etotal2 equal etotal*etotal

fix aves all ave/time 100 1000 100000 v_temp v_etotal v_etotal2

run 100000

variable avetemp equal f_aves[1]

variable aveetotal equal f_aves[2]

variable aveetotal2 equal f_aves[3]

variable cv equal (v_aveetotal2-v_aveetotal*v_aveetotal)/(v_avetemp*v_avetemp)*atoms*atoms/vol

print "Averages"

print "--------"

print "Temperature: ${avetemp}"

print "Heat Capacity: ${cv}"

According to statistical thermodynamics, the heat capacity of a system can be determined from the magnitude of the fluctuations about the average equilibrium value for the total energy, whilst the temperature is held 'constant'. The following equation describes this relationship:

is the number of atoms and is the variance in which relates to the fluctuation about equilibrium.

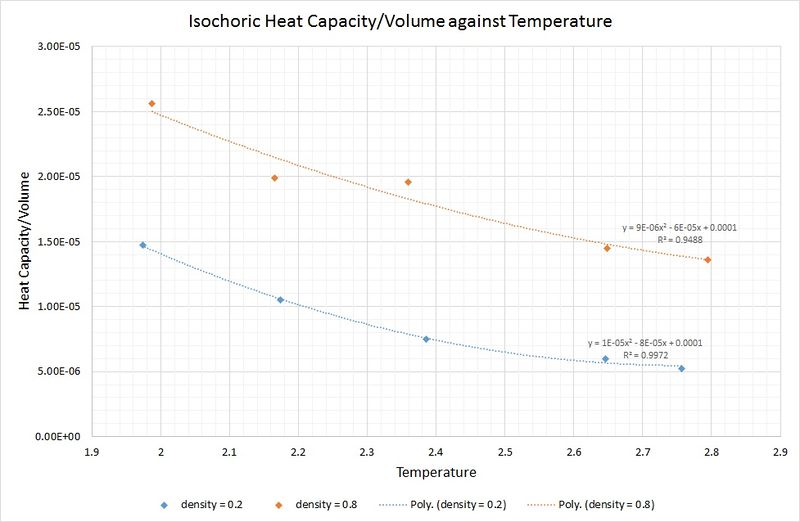

The graph below shows that an increase in density leads to an increase in the isochoric heat capacity:

This is as you would expect, because a system of fixed volume with a higher density contains more atoms, thus a greater amount of thermal energy is required to raise the temperature of the system by the same amount as that of a lower density.

Perhaps surprisingly, the heat capacity decreases as the temperature increases. One rationale for this is that at higher temperatures there is an increase in the density of states for the system (i.e. there are a greater number of occupationally available states per interval of energy at each energy level). This means that heat transfer to the system is made more facile as less energy is needed to proportionally populate each energy level.

JC: Good explanations, more detailed analysis would be needed to give a detailed explanation of the behaviour with temperature.

Radial Distribution Function

In order to ascertain what values of temperature and density to use for the simulations of solid, liquid and vapour phases of the Lennard-Jones system, a phase diagram for the Lennard-Jones system was analysed. To make the results more comparable, the selected temperature was kept at 1.2 (in reduced units) for all three phases. The corresponding densities were as follows:

Vapour density = 0.01

Liquid density = 0.8

Solid density = 1.2

The output trajectory files from these simulations were used to calculate the radial distribution functions (RDFs).

The RDF describes the probability that an atom can be found at a certain distance from a reference atom; it is an indication of any long-range order within a system.

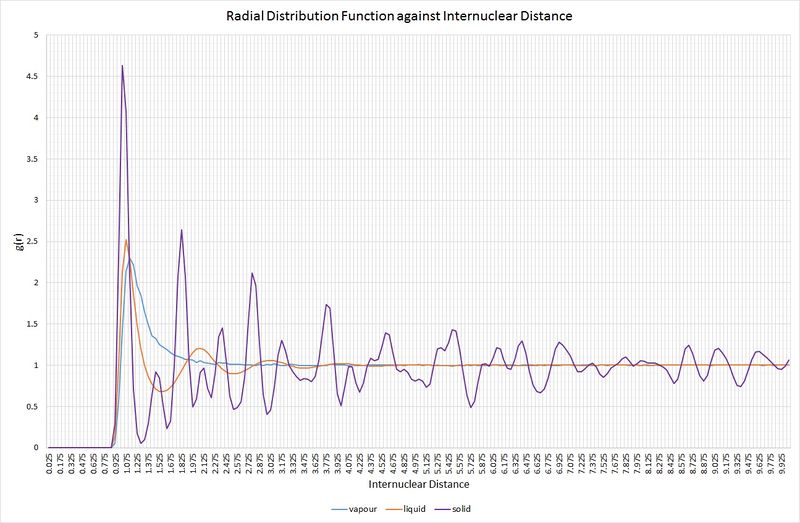

The following graph shows the RDFs for the vapour, liquid and solid phases from the simulations:

Comparison of the RDFs for each of the three systems highlights some key structural features. Firstly, the vapour phase holds no long-range order, as indicated by the fact that it only has one maximum in the RDF, rapidly decaying to a minimum (at ) beyond an internuclear separation greater than approximately 1.2. For the liquid phase, there are three distinct maxima whose amplitudes significantly decrease with increasing internuclear separation. This decrease in amplitude as the RDF approaches one is representative of an absence of long-range order within the system, but it is more ordered than the vapour. In a solid, the RDF has an infinite number of sharp peaks, the separations and heights of which are characteristic of its lattice structure. The solid simulated here demonstrates the RDF of an fcc lattice. Over the range of the simulation, a constant minimum has not been reached, signifying considerable long-range order, as one would expect for a solid. At short distances (less than one atomic diameter) for all three phases; the probability of finding two atoms at or below this separation is zero. This is a result of the strong repulsive forces between atoms. The varying peak broadness between phases is also significant; the solid RDF exhibits the narrowest peaks because a solid experiences minimal random atomic motion compared to a liquid and a vapour.

An experimental determination of the RDF can be carried out using X-ray diffraction, via analysis of the diffraction patterns. A solid would generate an X-ray diffraction pattern with bright, sharp spots, characteristic for the regular arrangement of atoms in a crystal, whilst a liquid would create a pattern with regions of low and high intensity without sharp spots. Such experimental distribution functions could then be compared to those generated by simulations.

JC: Good understanding of the RDFs for the different phases and on how they can be connected to experimental results.

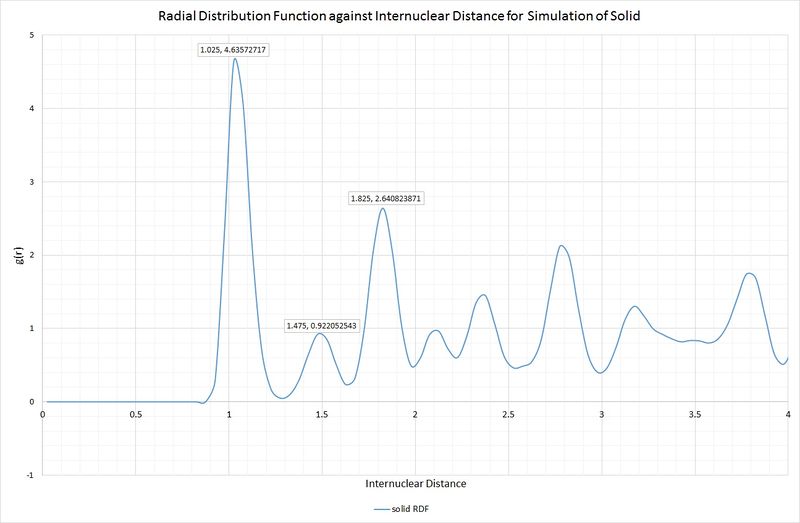

Lattice sites, lattice spacing and coordination numbers can be determined from the RDF peaks. We will take the first three peaks of the solid RDF to illustrate this:

Intuitively, one would expect these peaks to correspond to the three shortest interatomic distances within the unit cell of an fcc lattice. Below is a scheme illustrating this unit cell, and the corresponding distances:

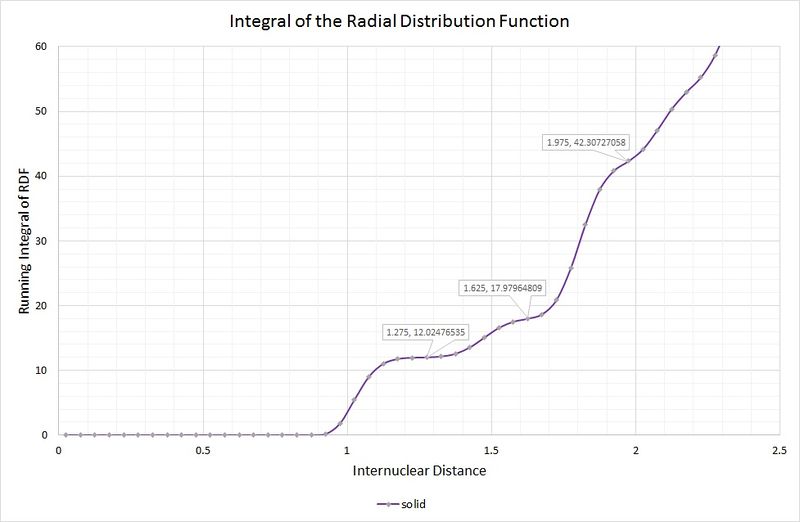

A, B and C are at distances of 1.025, 1.475 and 1.825, respectively, from the reference atom. The lattice spacing is therefore 1.475 as B corresponds to the length of the unit cell. The coordination numbers can be found by inspection of the unit cell, or by inspection of the integral of the RDF, as shown in the following graph:

JC: What about the first and last peaks (A and C)? Can you also calculate the lattice parameter from these values and does it agree with the second peak?

The first coordination sphere (A) consists of 12 atoms, which can be read from the first highlighted point on the graph where the curve plateaus. The second coordination sphere (B) contains 6 atoms; the integral is a running total, therefore the number of atoms from the previous sphere need to be subtracted (i.e. 18 - 12 = 6). The final sphere (C) contains 24 atoms, by the same method.

JC: Can you also count the coordination numbers directly from the fcc structure?

Dynamical Properties and the Diffusion Coefficient

The diffusion coefficient, , describes the movement ability of atoms in a system. In the discussion that follows, two different methods have been used to determine : mean squared displacement and velocity autocorrelation function.

The temperature and density parameters used were the same as in the previous section:

Temperature = 1.2 for all three phases

Vapour density = 0.01

Liquid density = 0.8

Solid density = 1.2

Mean Squared Displacement

is related to the mean squared displacement (MSD) by the following equation:

To get the final value for we must divide by the simulation timestep, which in this case is 0.002.

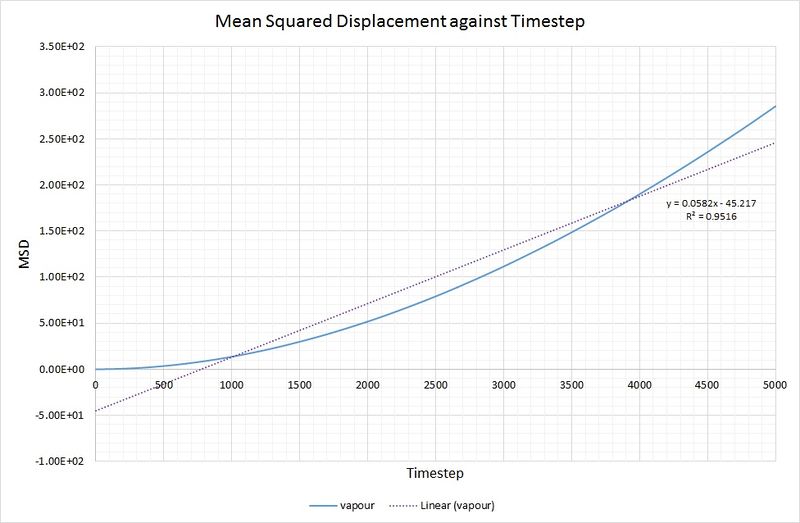

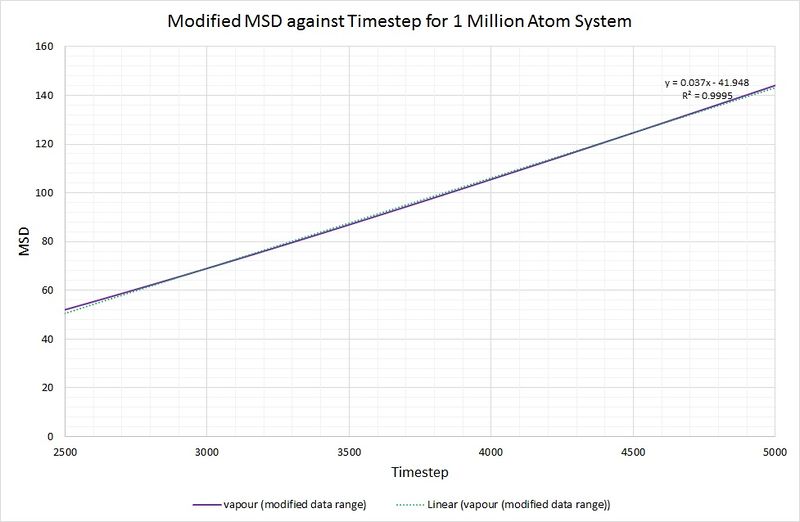

As you can see, there is not a particularly good agreement between the linear fit and the vapour phase plot for MSD against timestep:

To rectify this, making the calculation of more accurate, the data range used has been limited to 2500-5000 for the timestep:

JC: Use an even smaller range of x values to make sure that you are only fitting to the linear part of the graph, where your simulation has reached the diffusive regime.

Now that the linear fit is more appropriate (as indicated by a higher value of R squared), we can calculate by taking the gradient of the line, dividing by the timestep and multiplying by :

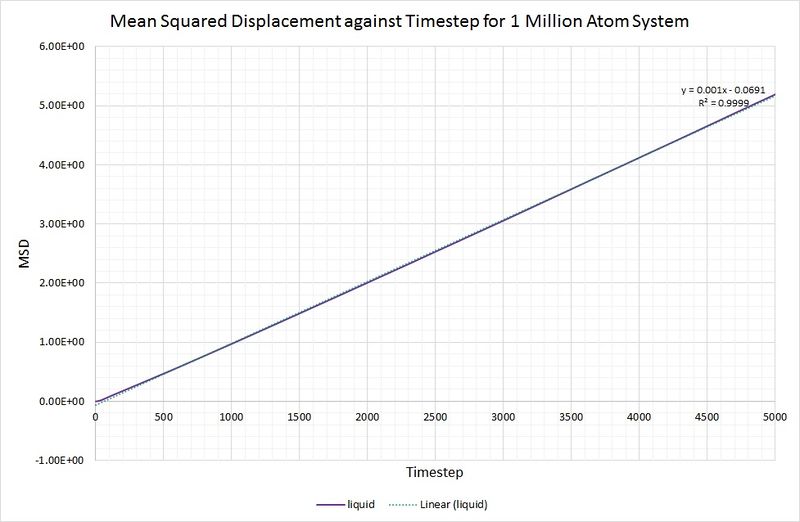

The same method is used to determine the diffusion coefficient for the liquid (), but without the need for data range modification:

This value is considerably lower than that obtained for the vapour phase, which is as you would expect because a gas is far less dense than a liquid, and the gaseous atoms can therefore diffuse much further and more easily.

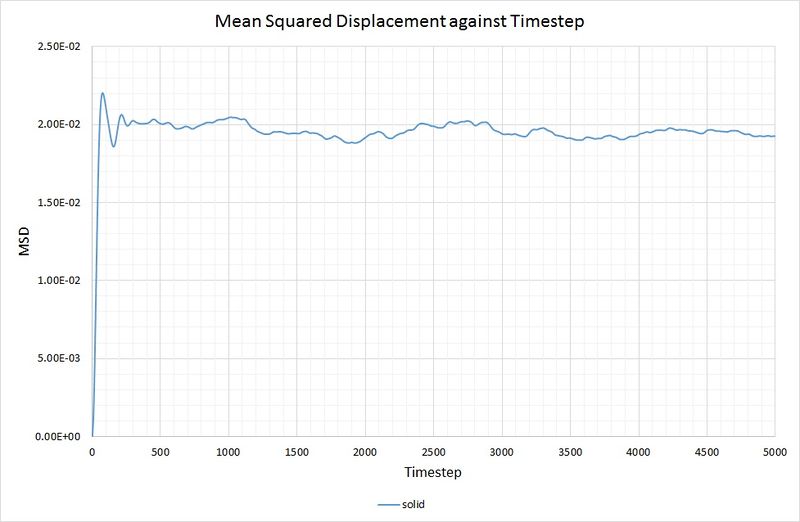

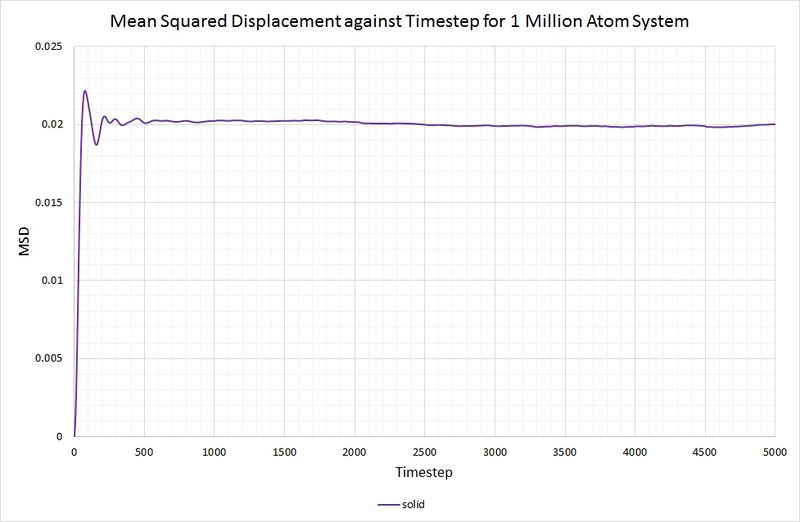

For the solid phase, the gradient of the curve fluctuates, but is approximately zero, thus . Again, this is as you would expect, because the atoms in a solid have some motion as they vibrate, but they cannot diffuse through the crystal because each one collides with its nearest neighbours. The graph for the solid phase MSD is shown below:

For comparison, the same method has been employed on the data generated by a larger system containing 1,000,000 atoms. The graphs can be seen below:

Summary of diffusion coefficients for both systems:

| 8,000 atom system, | 1,000,000 atom system, | |

| Lennard-Jones vapour | 6.89 | 3.08 |

| Lennard-Jones liquid | 0.0833 | 0.0833 |

| Lennard-Jones solid | 0 | 0 |

for both the small and large system. As mentioned before, this is because the function of the MSD reaches an average constant value (around 0.02). A zero gradient means that an infinite amount of time is needed for the atoms to vary their MSD, thus it can be deduced that there is approximately no diffusion in the solid in either simulation.

The two systems result in the same value for . From this, it can be deduced that 8,000 atoms is a large enough system to generate accurate results.

There is a large disagreement between the values of , most likely arising from a difference in density between the two simulations. A higher diffusion coefficient relates to a lower density as particles can diffuse more rapidly, thus the smaller system here has a lower density. Both graphs for the vapour phase MSDs have an initial non-linear trend. This is a result of the particles' momenta initially dominating over the time-dependent diffusion coefficient. As more time passes, the systems equilibrate as becomes constant, and the graphs begin to display linearity.

JC: Great analysis.

Velocity Autocorrelation Function

Our second method for determining involves using the velocity autocorrelation function (VACF). The VACF describes the change in velocity of an atom with respect to time. The time at which the velocity becomes uncorrelated is denoted as , and is the difference between the velocity at time and time . The integral of this function is what leads to determining the diffusion coefficient.

Normalised VACF for a 1D Harmonic Oscillator

The VACF:

This can also be written as:

The equation defining position in a classical harmonic oscillator can be used to solve the VACF, first by differentiating to get the equation for velocity:

We also need the following expressions to substitute into the integral:

Substituting these identities into the equation for the VACF gives:

which can be simplified to:

where:

Using the trigonometric identity:

the following expression is obtained:

which simplifies to:

The trigonometric identities:

are then rearranged for and , respectively, and substituted into the previous equation:

Sine is an odd function, therefore its integral over infinite space is zero and this term cancels out of the equation, leading to the final result for the normalised velocity autocorrelation function for a 1D harmonic oscillator:

JC: Good derivation. An odd function multiplied by an even function is also an odd function, so cos(x)sin(x) is already an odd function. The integral of an odd function is zero as long as the limits are symmetric about x=0.

Diffusion Coefficients from VACF

The simulations performed previously for the MSD also provided data for the VACF, thus calculations of for the solid, liquid and vapour phases have been done for both the 8,000 atom system and the 1,000,000 atom system.

The VACFs for the solid and liquid from the 8,000 atom system were plotted against timestep, also with the VACF for the classical harmonic oscillator, with :

It can be seen from this graph that the correlation between velocities for the liquid and solid decreases as time passes, which is a result of collisions between particles. The Lennard-Jones solid experiences a few oscillations about VACF = 0, with a decrease in amplitude each time. This damped oscillation arises due to the frequent collisions. The Lennard-Jones liquid displays only one minimum as particle collisions are less frequent for a system of lower density.

Van der Waals forces of attraction occur between atoms of intermediate distance in the Lennard-Jones solid and liquid, which result in repulsions when the atoms get too close, but it is the collisions between atoms that cause a change in velocity, leading to the minima in the graphs when the product of the velocities is at a maximum (but negative due to the opposing directions).

There are no collisions involved for a harmonic oscillator, hence it oscillates periodically without being damped and looks vastly different to the liquid and solid VACFs.

The VACF for the vapour phase is absent here, because it would simply give an almost perfectly horizontal line. This is because the vapour is so diffuse that no collisions would occur in the time-frame under study.

The diffusion coefficient is related to the VACF by the following equation:

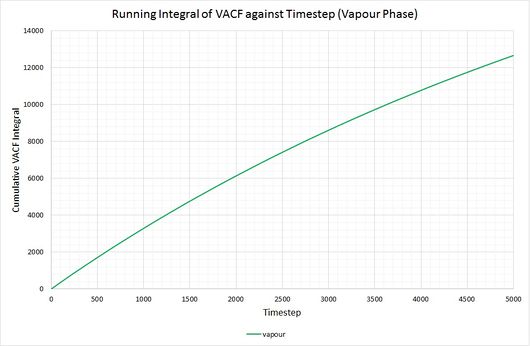

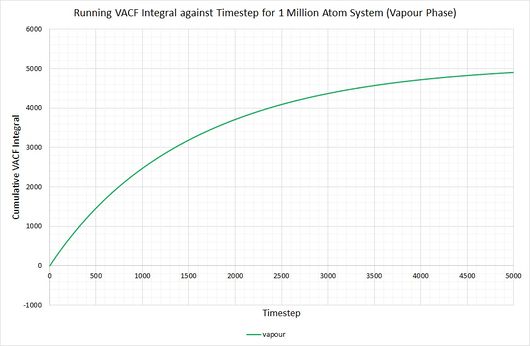

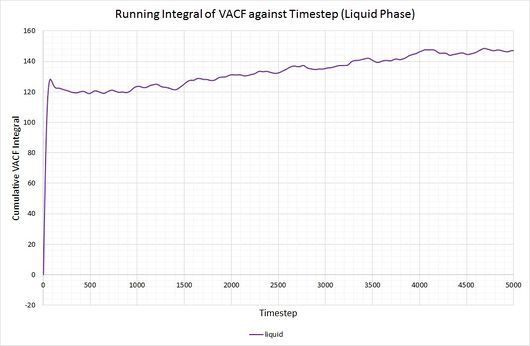

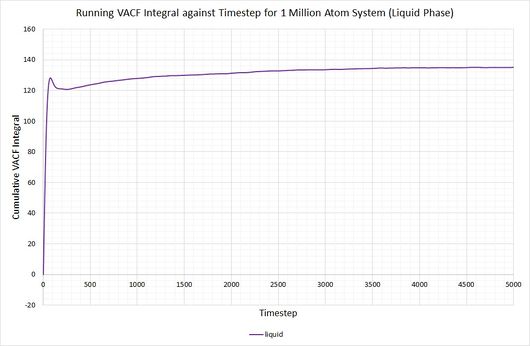

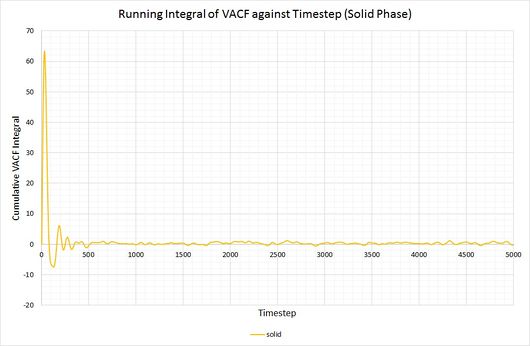

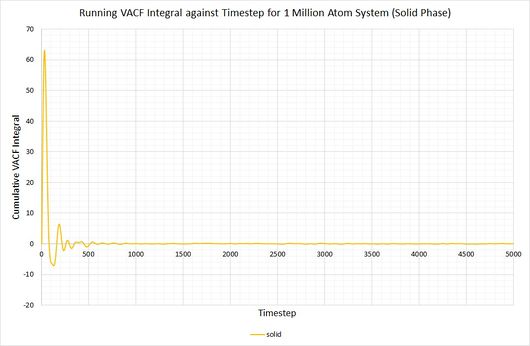

By plotting a running integral of the VACF (using the trapezium rule) against timestep, the final value can be used to determine the diffusion coefficient. The following graphs were obtained for the system of 8,000 atoms and the 1,000,000 atom system:

By taking the final value of each running integral, dividing by 3 and multiplying by 0.002 (to correct for the timestep), the following diffusion coefficients were obtained:

| 8,000 atom system, | 1,000,000 atom system | |

| Lennard-Jones vapour | 8.45 | 3.27 |

| Lennard-Jones liquid | 0.0979 | 0.0901 |

| Lennard-Jones solid | approx. 0 | approx. 0 |

The largest source of error when determining for the two systems is that it is assumed they take the same amount of time to converge. This is particularly prominent in the case of the vapour phase where you can clearly see that the simulation run with 1,000,000 atoms has converged much more considerably than the one run with 8,000. The values for also show the least agreement, which reflects this error.

As before, the diffusion coefficient for both solids equates to approximately zero, employing the same rationale as with the MSD.

The two methods for determining are in reasonably good agreement with each other.

JC: Good thorough treatment of units so that diffusion coefficients can be compared. Does the trapezium rule introduce a significant error?