Draft:Y1 python data analysis article draft

Data Analysis for Chemistry with IPython Notebooks

Clyde J. Fare, João Pedro Malhado, Andrew W. McKinley, Michael J. Bearpark

Abstract

We proudly present...

Background AWM

Data is everywhere: it is central to the physical sciences, and research is becoming increasingly data driven. In the public eye, large central facilities such as Diamond, ESRF and CERN deliver vast quantities of data from which groundbreaking results may be discerned, frequently capturing the public imagination. It is therefore increasingly important that scientists in the 21st century are able to access and process the data generated in the course of experiments. ESTIMATE OF DATA FLUX AT KEY FACILITY? AWM to investigate. Morgane: data collection and post-processing from LCLS experiment. Data stores, accessing data from scientific research.

We are entering an era of Big Data [citations from media?], yet our students (and many staff!) know only standard spreadsheet programs (Excel, Google Sheets). Spreadsheets are excellent for many things, but they do not scale to vast data sets: what are initially seen as strengths for smaller problems become limitations.

Today, there is a widespread drive to increase coding skills: coding has entered the primary school curriculum [REF: national curriculum], increasing numbers of students are choosing to study ICT at GCSE and A-level [SOURCE], and it is broadly acknowledged that coding literacy is likely to become as essential to the 21st century skill-set as literacy and numeracy were to that of the 20th century. [REWRITE, Quote for coding “essential skill - we’ll wonder why we didn’t teach it sooner”]. 2014 was declared the “Year of Code” by the UK government in a drive to increase the uptake of coding [CITE], and initiatives such as ¿code camp? have also served to raise the public profile of programming.

We have an emerging dichotomy in our society: a child of 10 years old is starting to have an interest in coding, and even an ability to code (driven by initiatives such as the Raspberry Pi Foundation and the ever-decreasing cost of microcontroller platforms such as the Arduino), while a student of 20 years old in the physical sciences may not know any coding, and both live in a society where they routinely use data-based technology. Increased computer and data literacy is fast becoming a necessity. Do we have a lost generation? Those who remember the BBC microcomputer in schools are in their mid-30s, and no comparably accessible platform for coding was available until the introduction of low-cost ‘credit-card computers’ such as the Raspberry Pi.

In our experience of teaching chemistry in a UK institution, we are aware of the need for coding in our discipline; coding education has been prevalent in chemistry curricula (in one form or another) since computers became accessible to universities (CITE). More recently, initiatives have been taken in this institution to teach programming in context [TEMPLER]. However, the course was discontinued owing to a number of practicalities, including the challenges of using a proprietary language (smaller community, impracticality of self-study), the difficulty in training support staff and a failure to keep the content of the course relevant to the rest of the programme of study.

Over the past five years in our department there has been an increase in the appetite for coding among students and staff alike (vide supra). In 2014 we took the decision to revamp our teaching of physical chemistry, and this provided an opportunity to reintroduce programming to our curriculum.

Redesigning the physical chemistry curriculum Data analysis workshops/excel/matlab (group discussion based workshops). Reintroducing programming

- 15 years previously, taught Mathematica [Templer published article]

- unpopular with students

- difficulty in training demonstrators, students with no experience of coding did not see relevance

¿HSR taught a Fortran programming course? Why did he/we stop? AWM to investigate.

The idea of implementing a programming curriculum was supported by many staff - not just those who feel strongly about it. Lab coordinators had concerns about students’ ability to work with data: collecting it, understanding potential sources of error and plotting where necessary. Finding an extensible tool to address problems working with data was the first step. Independently we had also developed a programming workshop for 2nd year students specialising in Chemistry With Molecular Physics. While the first goal of the course presented here was to improve students’ ability to work with data, the second goal was to reintroduce programming: not just for students who might be expected to be enthusiastic, but for everybody.

Within the department we have always taught a short course in data analysis as part of our theoretical methods series. This has undergone various iterations and been delivered via a number of different techniques, from lectures supported by a workshop using a spreadsheet to analyse data to, lately, an approach which replaced the spreadsheet exercise with a MATLAB exercise covering similar material. We observed poor retention of knowledge from this short course, and consequently saw that it was in need of rejuvenation. It was thus natural to combine our programming and data analysis exercises, with each supporting the other in student learning. Following a successful trial with first year students in January 2014, the theory of data analysis is now taught through a group-tutorial style workshop with a staff facilitator; its key aims range from challenging misconceptions (e.g. about significant figures, scientific notation and rounding) to introducing new areas such as estimation of uncertainties and error analysis. This introduces the essential concepts to the class ahead of the practical exercises in data handling and analysis.

The goal of this workshop was to introduce an exercise grounded in programming to all of our students, based on a specialised workshop for a small number of students developed the previous year. This is to help students become 'data literate' and better equipped to solve problems relating to data, measurement, errors, and ultimately design of simulations and experiments.

Challenges

In the design of the course, a number of challenges were immediately apparent. The first was to account for the likely differing levels of coding experience among students: there is a risk that those with experience (whether formally taught at school or gained through self-directed hobby activities) may be seen to have an advantage (fair or otherwise) over those without. Experience from earlier programming exercises in the department also showed that, whatever the nature of the course, the material had to be directly relevant to the study of chemistry; without this there is a risk that students would not apply the knowledge gained on the course. For these reasons the course was deliberately designed not to teach a given language; i.e. coding and the programming language were not primary learning outcomes. Rather, the course was designed to replace the existing data analysis workshops, using the programming language as a means to automate the process of analysis. This was intended to address both challenges: although those with pre-existing experience might have an advantage with the coding, they still had to learn to apply it in a practical context; and because the language was used in a directly applicable manner, the material was kept relevant to the exercises the students undertake in the undergraduate teaching laboratories.

There is also a challenge in the coding experience of the staff: while staff are aware of the benefit of coding and were supportive of this initiative, those who routinely code do so at a high level and in disparate languages. The choice of language was key to the implementation of the course, and the decision was between MATLAB and Python; Python was chosen for the reasons outlined below in SECTION.

Once the key design decisions had been made (vide infra), course prerequisites were established so that the course could start at the appropriate level. As this is a first year course, the number of prerequisites is low; they are nonetheless key to its success. Firstly, we require that students have A-level maths for the calculus used in the workshop; as this is an admission requirement for our courses it is a formality, but we stress that students should revise this content. The second requirement is completion of the data analysis workshop in week 3 of the term, so that the theory behind the analysis exercises has been covered.

- Stagnation of knowledge - staff with the knowledge to teach a language?

- Experience - not all students have experience with coding - equality of access and assessment

- Breadth of languages - what language to select and why?

- Resources - availability of equipment

- Ensuring relevance within the context of chemistry

- Identifying appropriate course prerequisites.

Major challenge: implementing the workshop. There was nothing available we could re-use off the shelf. The number of sessions available for the course wasn’t clear until shortly before the course was due to run: in the end, more were booked than expected. One part of the course did not show up [Ian - orbitals section]. This had an impact on designing assessments for the course [see SECTION X]: the challenge was to implement and test in time.

Aims and objectives

The course was designed to address the data analysis needs students are faced with during the degree and increasingly in the job market. Although it is usually expected of students to be able to quantitatively analyse and present their results, the techniques to do so are often not taught.

The approach followed was to present the data analysis techniques that would be of immediate use in the context of undergraduate physical chemistry laboratories, but also to present tools that offer scope for progression to more advanced analysis and use cases. In this context, we chose an interactive platform (the IPython notebook) with an associated programming language (Python) in preference to a more traditional and more limited spreadsheet.

Our main Intended Learning Outcomes were thus very practical in nature:

- Process and import data as it would be produced by a digital instrument.

- Adequately plot data (including line plots, scatter plots, error bars, logarithmic scales, histograms, 3D plots).

- Use descriptive statistics to characterize data.

- Perform linear fits (including parameter uncertainty estimates, residual analysis, statistical weighting of data points and regions of non-linearity).

- Perform non-linear fits to an arbitrary functional form.

- Apply techniques learned through the course to new data sets.

In pursuing these immediate practical goals, students are prompted to develop a more structured way of thinking about data analysis and problem solving.

The development of such “higher level” goals is naturally not limited to a single course. A secondary goal of our course, which is also implicit in our choice of working platform, is to open the way to subsequent courses in computer programming, which will further explore the “structured thinking” paradigm. It is important to stress that while teaching programming is a natural possible extension of our course, this is deliberately not a course on computer programming or the Python language.

Design of materials

Although the above-mentioned Intended Learning Outcomes could be achieved with a traditional spreadsheet program, we did not want to teach merely a specific tool or one way to accomplish a task. Since the choice of any software platform places some restrictions on the way a given problem is addressed, we chose a platform that would be as extensible as possible, one that would allow students to address their current data analysis needs but also any advanced use in their research and professional careers. By taking this approach we wanted to avoid the overhead of learning a software tool that would be sufficient for the student’s immediate needs, only to repeat the process later on when that tool proves too limiting.

With extensibility in mind, the chosen platform would need to be associated with a programming language. It should also be interactive, suitable for working with data in real time and allowing for quick visualization of results. There are several computational packages that could fulfil these requirements, such as Mathematica from Wolfram Research, MATLAB from MathWorks, GNU Octave or Scilab. Although these are very capable and have large user bases in academia and industry, the associated programming language is in each case package specific, with a single implementation, and prone to vendor lock-in. Our choice fell on the IPython Notebook (http://ipython.org/notebook.html) platform, the Python general purpose language and associated libraries. Python is a general purpose interpreted programming language with considerable momentum and resources [online documentation, books, libraries and downloadable code] behind it. Increasingly it is the first choice for data analysis projects both large and small, as well as the language of choice for teaching programming at university level (http://cacm.acm.org/blogs/blog-cacm/176450-python-is-now-the-most-popular-introductory-teaching-language-at-top-us-universities/fulltext). (FOOTNOTE: Python’s large user base also proved important in recruiting knowledgeable demonstrators to deliver the course, as we had almost 180 students, divided into three groups of almost 60.) The IPython notebook (http://dx.doi.org/10.1038/515151a) provides a multimedia interactive interface to the Python language that runs in a web browser. It offers the possibility to execute code and immediately see its result, making it ideal for experimenting, formulating hypotheses and testing ideas. The notebooks also combine code, output, figures, text and mathematical formulas in a single document. These features make it useful for student use as a replacement for, or complement to, a physical notebook, but also for the creation of course material as described below.

Other IPython courses are appearing now [REF], some much more in-depth, which also supports our choice of learning environment. The IPython notebook platform also has the advantage of being free software: it is widely available, there are no licensing restrictions, and students are able (and encouraged) to install it on their own devices. This point is not simply a matter of convenience (monetary or otherwise): it enables students to transfer the skills learned to different contexts and to formulate innovative solutions. For example, the Python stack is available for the Raspberry Pi, which was used in a subsequent experiment building an optical spectrometer with LEGO [REF].

By exposing students to such a general platform and associated programming language, we were faced with the task of taming and targeting this power to deliver a first year course that would address the Intended Learning Outcomes in data analysis without overwhelming the students. We thus moved away from teaching the basics of the Python programming language, instead selecting the minimum necessary to perform the task at hand and using available libraries to abstract away the details of the computing platform. (FOOTNOTE: The heavy use of external mathematical, numerical and graphics libraries led us to use the pylab environment (%pylab inline). This greatly simplifies syntax and avoids introducing the concept of external libraries and the mechanisms to load them at an early stage, even if it is a questionable choice from the perspective of teaching good programming habits (http://carreau.github.io/posts/10-No-PyLab-Thanks.html).) This approach resonates with the ideas of Christian Hill [Author of forthcoming CUP book ‘Learning Scientific Programming with Python’], who wondered if a course could be developed based around the SciPy and NumPy libraries. In making these choices there is a balance to be reached: introducing limited syntax whilst giving the students a consistent mental model of how the syntax works. Too much introduction and explanation of syntax increases cognitive load and dispirits students; too little means students do not understand what they are doing and so cannot generalise from the examples (e.g. can plot y vs. x, but only if the variables happen to be named x and y).

We chose to base the course on the NumPy array data structure, instead of more conventional structures in Python such as lists. This presented many advantages: NumPy arrays are vector-like data structures in which data can be stored and accessed with convenient indexing mechanisms. The use of arrays allows for operations of the form

y=m*x+b

where x and y are collections of data and m and b are scalars, without the need to invoke loop constructs. Aspects of vectorised data structures that are often taught at a more advanced stage were thus introduced as core material from the beginning.
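As a minimal sketch of the array expression above, the following compares the vectorised form with the explicit loop it replaces. The numerical values are made up for illustration, and the explicit `import numpy as np` is used here in place of the pylab environment used in the course.

```python
import numpy as np

# Hypothetical values chosen only for illustration.
x = np.array([0.0, 1.0, 2.0, 3.0])   # a collection of data points
m = 2.0                               # slope (scalar)
b = 1.0                               # intercept (scalar)

# One expression acts on every element of x; no loop is written.
y = m * x + b

# The equivalent calculation with a plain Python list needs an explicit loop.
y_list = []
for value in [0.0, 1.0, 2.0, 3.0]:
    y_list.append(m * value + b)

print(y)        # [1. 3. 5. 7.]
print(y_list)   # [1.0, 3.0, 5.0, 7.0]
```

The array form reads almost exactly like the equation it represents, which is the property the course relied on.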

By design we thus decided not to introduce two fundamental (and potentially complex) constructions in programming: loops and conditional/if statements.

Conversely, we did introduce other important concepts in programming, some of which have close analogues in mathematics: variables, arrays (indexing, filtering), modules, functions (calling pre-existing, defining new) and reading/writing files. The introduction of these concepts anticipates a more formal course in programming at a later stage.

The course was organized as a self-paced learning exercise in which each student worked individually at a computer, following interactive notebooks. These offered text that put the tasks in context, together with command inputs and their explanation; students were invited to type and explore these, on occasion extend them, and inspect the output obtained.

We chose the programmed workbook approach because learning to write code is like learning a new language or a musical instrument: it’s a skill that’s developed by experimenting, trying ideas out, thinking about what works and what doesn’t, and asking for help.

Our intended learning outcomes have been loosely aligned to the SOLO taxonomy (Biggs 1999); the criterion referenced nature of this assessment means that the learning outcomes may be fulfilled through completion of the workshop, including the exercises. As feedback to students is immediate through the programmed learning approach, they are able to see for themselves - in real time - their successful completion of the learning outcomes.

Implementation - Running of the class/workshops

Testing - only the 1st workshop was tested by staff and student demonstrators. This was not ideal. Other workshops were tested by the two developers swapping parts of the notebooks. Demonstrators had worked through the exercises, but these were near-final versions as we were close to running the lab.

We had timetabled 6 x 3 hour slots. We developed 5 notebook based sessions and used the sixth session as a spill-over session for students to complete work they had not managed to complete in the first 5 sessions. The first session format involved a 30 minute introduction and explanation of the course form and content. Subsequent sessions included a 10 minute presentation highlighting the key areas already covered that would be reused during the session.

The first three notebooks introduced material alongside some short exercises. The final two were entirely exercise based and were designed to reinforce the material covered in the initial three in a form that was relevant to the analysis of chemical data.

The first workshop introduced the IPython notebook platform, basic mathematical expressions, variables and the core data structure used throughout the workshops: arrays of one and two dimensions. Following their introduction we covered 2D line and scatter plots of array data, before going through the options for customising plots to the degree necessary for producing publication quality figures.

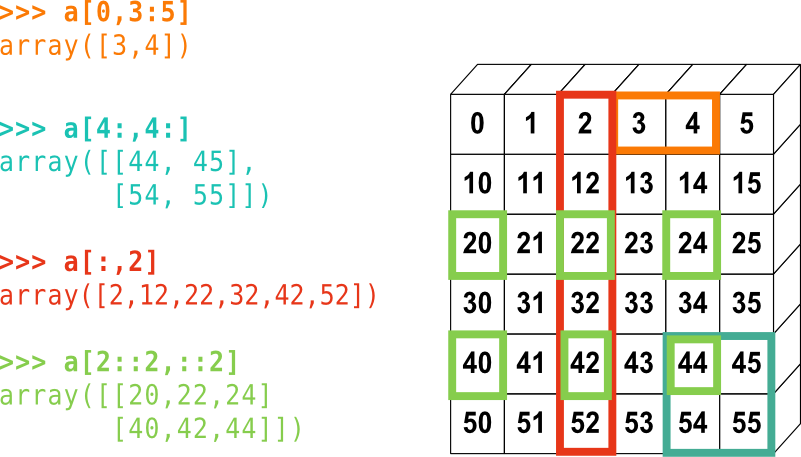

The following figure taken from [reference to scipy lectures] was used to summarise a key concept: accessing elements within arrays:

The second workshop recapped and expanded upon the elements introduced in the first before introducing reading and writing data, computing statistics from arrays and fitting linear functions to data. The third workshop introduced modules, functions, fitting non-linear functions to data and using the notebook for interactive visualisation.

The first exercise notebook consisted of two exercises. The first required loading and manipulating a large data set (>5000 data points) of the type that shows off the advantages of the Python based approach vs. off the shelf spreadsheets. Students had to load files nominally representing noisy measurements from some unspecified measuring device and statistically analyse this data. This required loading and plotting data, selecting subsets of data, and computing and storing statistics for these subsets. The second exercise involved fitting chemical kinetics concentration vs. time data. This required students to plot data, define their own concentration vs. time function and make use of the non-linear fitting machinery.

The second exercise notebook consisted of three exercises. The first required the students to determine the concentration of glycine from chromatogram data of a sample of ice. This required them to define functions, use non-linear fitting and compute the area under a curve. The next two involved fitting multiple Gaussian functions to emission spectra.
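The area-under-a-curve step can be illustrated with a short sketch. It does not reproduce the course exercise: the single Gaussian peak, its parameters and the function name `gaussian` are all assumptions made for illustration, and the trapezium rule is written out by hand, again without loops.

```python
import numpy as np

def gaussian(x, amplitude, centre, width):
    """Gaussian peak; x (the domain) comes first, then the parameters."""
    return amplitude * np.exp(-((x - centre) ** 2) / (2.0 * width ** 2))

# Hypothetical peak standing in for a fitted chromatogram signal.
x = np.linspace(0.0, 10.0, 1001)
y = gaussian(x, 2.0, 5.0, 0.5)

# Trapezium rule: average neighbouring y values, multiply by the x spacing, sum.
area_numeric = np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x))

# For a Gaussian the exact area is amplitude * width * sqrt(2*pi).
area_exact = 2.0 * 0.5 * np.sqrt(2.0 * np.pi)

print(area_numeric, area_exact)
```

Comparing the numerical result with the known analytic area is a quick check that the integration range and point spacing are adequate.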

Assessment

During the course design phase, one of the key decisions concerned assessment and, indeed, how to quantify it. In the programmed learning approach the students undertake bite sized tasks that guide them through the application of the programming to a test data set. In doing so they receive automated feedback immediately, whether in the form of ‘error codes’ - which are reasonably user-friendly - (ERROR FIGURE) or in the form of a successful return (a plot, returning a matrix etc.). The student then has the opportunity to revisit the task immediately and try to work out “what went wrong”. The experience of running the class, however, showed that this had the potential to cause confusion among some students. Because of the programmed learning approach, it was possible for students to follow the initial tasks of the exercise blindly, without giving much thought to the process of constructing their code. Students who do not engage with these preliminary steps are not able to complete the exercises at the end of each script, and consequently have to revisit the earlier material to find out what they need to complete it. (FOOTNOTE: Students also need to follow this material through in order to participate fully in the assessed components of the physical chemistry laboratory; failing to engage with it therefore has a delayed consequence in future summatively assessed exercises.)

The tasks in this class are self guided by design and, as a consequence, the learning outcomes are directly satisfied through completion of all the tasks. Consequently our main concern was to ensure that 1) the students completed the tasks, and 2) that the students completed the tasks themselves - or at least through appropriate collaboration with colleagues. For this reason, we felt it important that students undertook the exercises in a workshop environment - this way we were sure that students had completed the tasks appropriately, and that they had support from trained demonstrating staff to explain error codes and how to ‘debug’ their code.

Students were not directly assessed in a summative manner through the course of this work; as the material forms the foundation for future courses [JE work] it functions as a training prerequisite for the completion of the physical chemistry laboratories. While we are aware that some (not all) students used their new skills in Python to analyse data in the first year physical chemistry laboratory, it was an option for 2014 but not a requirement at that point. The assessment criterion therefore was decided to be a completion point; students that successfully completed all the tasks were deemed to have passed the course. For this, students submitted their completed workbooks at the end of each session to register attendance and completion of the tasks. (FOOTNOTE: The experience of running the class showed that either by accident or intent, some students chose to hand in empty notebooks; the reasoning for this is unclear. This however was straightforward to identify and remedial action was taken.)

180 x 5 IPython notebooks - don’t want to mark these: want to provide feedback in real time. Also don’t need the marks, and wanted to encourage students to complete material we think is vital for them without getting a little piece of cheese at the end of the corridor. [General course redesign goal].

Because of concerns that students would not attend classes which were not assessed in some way, we created a scheme to assess attendance: students had to hand in their completed workbooks at the end of each session to register their attendance, and they were advised that this was assessed. Predictably, some chose to hand in empty notebooks: however, it was straightforward to test for this.

Evaluation

Through the running of this course, a great deal of feedback was received, including direct feedback in the form of the student reception of the material during the workshops and formal feedback through the SOLE (Student OnLine Evaluation) mechanism at the end of the term. Feedback was mixed, ranging from some excellent and extremely encouraging comments, through broadly positive comments including some constructive criticism, to a few comments which were wholly negative. The student responses broadly align with our own experience of running the class, with the main point of criticism being the timetabling of the course as six 3-hour sessions.

Student responses

Immediate

Pain points:

The notion of variables: some students struggled with the arbitrary nature of variable names. One manifestation of this occurred in plots, where students attempted to plot an undefined x variable, confusing the x-axis with an assigned variable named x.

Many students found grasping the concept of arrays of numbers and accessing elements of those arrays difficult. This proved a stumbling block to further progress, as arrays were used throughout the notebooks. An example of this occurred in the histogram part of exercise 1: students made a single call to the histogram function, passing it the entire 2D array, instead of making several calls and passing in the individual columns.
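The column-by-column access described above can be sketched as follows. The data are synthetic stand-ins for the exercise files (the shape, means and spreads are assumptions), and `np.histogram` is used so that the example runs without a plotting backend; in the notebook the corresponding plotting call would be made once per column in the same way.

```python
import numpy as np

# Synthetic stand-in for the exercise data: 1000 rows, 3 columns,
# each column a separate set of noisy measurements (values are made up).
rng = np.random.default_rng(seed=0)
data = rng.normal(loc=[1.0, 5.0, 10.0], scale=[0.1, 0.5, 1.0], size=(1000, 3))

print(data.shape)            # (1000, 3)

# data[:, 0] means "every row, column 0": a 1D array of 1000 numbers.
first_column = data[:, 0]
print(first_column.shape)    # (1000,)

# One histogram call per column, rather than one call on the whole 2D array.
counts0, edges0 = np.histogram(data[:, 0], bins=20)
counts1, edges1 = np.histogram(data[:, 1], bins=20)
counts2, edges2 = np.histogram(data[:, 2], bins=20)

print(counts0.sum(), counts1.sum(), counts2.sum())   # 1000 1000 1000
```

Printing the shape of an array before using it is a cheap way to confirm that a single column, and not the whole table, is being passed on.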

Plotting mathematical functions vs. plotting data. Students confused the abstract notion of an equation with the discrete numerical data defined by that equation. Plotting in Python requires the latter, whilst students typically think in terms of the former. The confusion can be seen in the following code snippet.

y=m*x+c

plot(x,y)

This works only if x is a previously defined array and m and c are previously defined numbers. However, at first glance it appears as if we are simply defining and plotting an equation. (This is exacerbated by use of the linspace/arange functions to automatically generate arrays of numbers.) [Solns: We proposed that students look at the contents of the variables they were plotting before they plotted them, to reinforce the notion that the ‘x’ variable is an array of numbers, not an abstract symbol]
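A minimal sketch of the suggested remedy, printing the contents of the variables before plotting them, is given below. The values of m and c and the range of x are arbitrary choices for illustration, and NumPy is imported explicitly instead of relying on the pylab environment.

```python
import numpy as np

m = 2.0    # slope: must already hold a number
c = 1.0    # intercept: must already hold a number

# x is not an abstract symbol: it is an array of 5 numbers from 0 to 4.
x = np.linspace(0.0, 4.0, 5)
print(x)   # [0. 1. 2. 3. 4.]

# y is computed once, element by element, from the current contents of x.
y = m * x + c
print(y)   # [1. 3. 5. 7. 9.]

# Only now is there something to plot; in the pylab environment used in the
# course this would be plot(x, y).
```

Changing m afterwards does not change y: the line `y = m * x + c` stores numbers, not a relationship.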

When defining functions, the function domain and the parameters enter on an equal footing as arguments, yet they are treated differently when using curve_fit: we have to define a function that takes as arguments the domain of the function first, followed by the parameters (whose values are not yet known).

Fitting. The process of fitting involves determining parameters that make an equation best fit the presented data. The results from this process are the fitted parameters. These must then be used to generate data corresponding to the fitted curve/line which can then be plotted. Students struggled with the distinction between the parameters being produced by the fitting machinery and the final plot of the curve/line.
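The two stages described above, obtaining parameters and then generating the fitted curve from them, can be sketched with `scipy.optimize.curve_fit`. The first-order decay model, the function name `first_order`, the synthetic data and the starting guesses are assumptions made for illustration and are not taken from the course notebooks.

```python
import numpy as np
from scipy.optimize import curve_fit

def first_order(t, c0, k):
    """Concentration at time t; t (the domain) comes first, then the parameters."""
    return c0 * np.exp(-k * t)

# Synthetic "measurements" generated with c0 = 1.0 and k = 0.5 plus a little noise.
rng = np.random.default_rng(seed=1)
t_data = np.linspace(0.0, 10.0, 50)
c_data = first_order(t_data, 1.0, 0.5) + rng.normal(scale=0.01, size=t_data.size)

# Stage 1: fitting returns parameters (and their covariance matrix), not a curve.
popt, pcov = curve_fit(first_order, t_data, c_data, p0=[0.8, 0.3])
c0_fit, k_fit = popt
param_errors = np.sqrt(np.diag(pcov))   # one-standard-deviation estimates

# Stage 2: the fitted curve is generated by evaluating the function
# with the fitted parameters on whatever grid we want to plot.
t_smooth = np.linspace(0.0, 10.0, 200)
c_fit = first_order(t_smooth, c0_fit, k_fit)

print(c0_fit, k_fit)
print(param_errors)
```

Keeping `popt` and `c_fit` as separately named variables makes the distinction between "the answer of the fit" and "the line drawn on the plot" explicit.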

The first exercise of exercise session 1 was a guided exercise plotting histograms and showing the relation between statistical distributions and descriptive statistics such as the mean, standard deviation and standard deviation of the mean. This was a challenging exercise in practice, taking a long time to complete. Most students did not grasp the relevant statistical insight of the exercise.
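The three quantities named above can be computed in a few lines. This is only a sketch on synthetic data (the sample size, mean and spread are assumptions), intended to show that the standard deviation describes the spread of individual measurements while the standard deviation of the mean shrinks as more measurements are included.

```python
import numpy as np

# Synthetic noisy measurements standing in for the exercise data.
rng = np.random.default_rng(seed=2)
measurements = rng.normal(loc=10.0, scale=2.0, size=5000)

mean = measurements.mean()
std = measurements.std(ddof=1)              # sample standard deviation
sem = std / np.sqrt(measurements.size)      # standard deviation of the mean

# The same statistics for a small subset selected by slicing.
subset = measurements[:50]
sem_subset = subset.std(ddof=1) / np.sqrt(subset.size)

print(mean, std, sem)
print(sem_subset)   # larger than sem: fewer points give a less certain mean
```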

The workshops were too long: students were noticeably tired by the end of the sessions, and we could see that this impeded their ability to take in new information and detracted from their experience of the workshops. This was particularly evident during workshop two, where the key concepts come at the end of the workshop, leaving the students feeling frustrated.

The spill-over session was usually attended by about half of the students, and usually not by the weaker ones. This suggests that some students were giving up.

The fact that the workshop materials are self driven means the onus was on the students to read through and attempt to understand the material presented. However, in some cases we observed a resistance to engaging with the learning process. This manifested in a spectrum of attitudes, ranging from unquestioningly following the material in the notebook to refusing to read instructions and only typing commands. This problem became evident when the students came to the exercise sessions and realised they couldn’t do anything. [Solns: During the introductory talk we emphasised not simply copying and pasting, and trying to predict the results of code cells before executing them. Students need to know that if they go through without reading or understanding they will not survive exercise 1.]

Many students were surprised and challenged when asked to determine a simple derivative: the force as the derivative of a potential (given the expression for the potential), and then to plot both. This was not a numerical evaluation of the derivative. For some this was no problem; for others it was a problem to be avoided. Evaluating the derivative was not done within the notebook environment: we expected a short detour to solve it on paper, which caused some surprise / dissonance. Some students chose to use Wolfram Alpha for this. Needing to think required a jump from following the script - this was deliberate. We also suspect there was a concern with evaluating a derivative with respect to r rather than x - again, presenting this challenge was deliberate. [THRESHOLD CONCEPT - irreversible ‘aha’ moment. Meyer and Land 2003] Calculus doesn’t just work in terms of y and x: this isn’t a problem with Python itself.
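To make the task concrete, here is a sketch that assumes a Lennard-Jones potential; the draft does not state which potential was used in the workshop, so the functional form, the parameter values and the function names are hypothetical. The force is derived on paper, using the usual sign convention F(r) = -dV/dr, and entered as its own function. The numerical gradient at the end is added here only as a check on the algebra and was not part of the exercise.

```python
import numpy as np

def potential(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones potential V(r) (hypothetical choice for illustration)."""
    return 4.0 * epsilon * ((sigma / r) ** 12 - (sigma / r) ** 6)

def force(r, epsilon=1.0, sigma=1.0):
    """F(r) = -dV/dr, worked out by hand from the expression above."""
    return 24.0 * epsilon / r * (2.0 * (sigma / r) ** 12 - (sigma / r) ** 6)

# The variable is r, not x: the same rules of calculus apply.
r = np.linspace(0.95, 3.0, 2051)
V = potential(r)
F = force(r)

# Check on the algebra only: compare with a finite-difference derivative.
F_numeric = -np.gradient(V, r)
print(np.max(np.abs(F[1:-1] - F_numeric[1:-1])))

# The force vanishes at the minimum of the potential, r = 2**(1/6) * sigma.
r_min = 2.0 ** (1.0 / 6.0)
print(force(r_min))
```

Both V and F are then ordinary arrays that can be plotted against r in the same way as any other data.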

Interestingly a possible and valid approach to the use of the notebooks, followed by a small minority of students, is to directly attempt to solve the exercises at the end of the session, and selectively read the notebook material which allows them to solve the exercise.

Reflective (SOLE comments) - distilled into a summary; add some of the best quotations, both positive and negative

Feedback was split: many positive, enthusiastic and supportive comments; a few detractors who were negative about the whole experience. Major concern - 3 hour length of workshop sessions. It’s unrealistic for someone to concentrate at this level of intensity for 3 hours in first term - we agree; this was a timetabling constraint (time + space availability). Too much to learn in these workshops - fair point. Want more exercises, spread out through the workbooks - we agree. Concerns about lack of feedback: misunderstanding of what feedback is. The notebook environment means there is always feedback on whether a particular task unit has been successfully completed or not, and there are demonstrators to ask for help where there are misunderstandings. Feedback is not just a justification for a mark received, a point that’s repeatedly emphasised [AM to update this: useful for the future].

“Really clear workbooks, easy to understand, interesting tasks, demonstrators really helpful and allowed us to stretch our knowledge. Really enjoyable”

“During the sessions I completed the tasks and attempted the exercises but having left the exercises I feel as if I haven’t learned anything”

“The sessions were not helpful, in the workshops we were just retyping instructions”

“The self study method of working through workbooks individually was not particularly effective”

Some similar concerns with previous data analysis workshop: not unique to Python.

Staff responses

Evaluative

Reflective

Concluding remarks/¿refinements?

As the students struggled with the statistical aspects of exercise 1, we could pull out the statistics into a separate workshop. E.g. workshop 2 could just be statistics, then workshop 3 would be linear fitting, etc.

Something like 30-40% of students did not really gain much from the course, though 20% gained a great deal. The course is not helped by the fact that numerous concepts that are expected to be trivial and already known are in fact unknown (mathematical variables, functions, histograms, standard deviations); these increase the cognitive load (which is already too high).

I think there is a general need for a greater degree of repetition. The inbuilt help functionality should be used repeatedly, to get students used to it. The process of looking at variables to check what they are should be repeated, and the nature of the fitting process, as mentioned above, could do with some repetition. We have repeated array indexing and plotting a lot and that has helped.

To help students refocus, interrupt the class to highlight the points being made in the exercise

Take solace from others who have seen a significant proportion of computer science students fail to cope with programming in any meaningful way.

Supplementary information

The course material is available under a Creative Commons license. Release v1.0 represents the course as it was in 2014. GitHub link: [1]

Acknowledgements

Oliver Robotham, Niall Jackson, Paul Wilde. ¿External discussions?